I’m giving a talk today at the Internet2 workshop on Collaborative Data-Driven Security for High Performance Networks at WUSTL, St. Louis, MO.

You can follow along with the PDF.

There may be some twittering on #DDCSW.

-jsq

Good show! What effects did it have on spam? Not just spam from this botnet; spam in general.

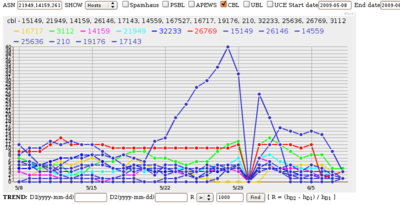

This graph was presented at NANOG 48, Austin, TX, 24 Feb 2010, in FireEye’s Ozdok Botnet Takedown In Spam Blocklists and Volume Observed by IIAR Project, CREC, UT Austin. John S. Quarterman, Quarterman Creations, Prof. Andrew Whinston, PI CREC, UT Austin. That was a snapshot of an ongoing project, Incentives, Insurance and Audited Reputation: An Economic Approach to Controlling Spam (IIAR).

That presentation was enough to demonstrate the main point: takedowns are good, but we need many more of them, and much better coordinated, if we are to make a real dent in spam.

The IIAR project will keep drilling down in the data and building up models. One goal is to build a reputation system to show how effective takedowns and other anti-spam measures are, on which ASNs.

Thanks especially to CBL and to Team Cymru for very useful data, and to FireEye for a successful takedown.

We’re all ears for further takedowns to examine.

-jsq

Update: PDF of presentation slides here.

+--------+--------------------+--------------------------+
| type   | extended community | encoding                 |
+--------+--------------------+--------------------------+
| 0x8006 | traffic-rate       | 2-byte as#, 4-byte float |
| 0x8007 | traffic-action     | bitmask                  |
| 0x8008 | redirect           | 6-byte Route Target      |
| 0x8009 | traffic-marking    | DSCP value               |
+--------+--------------------+--------------------------+
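To make the traffic-rate row of the table concrete, here is a minimal Python sketch of packing and unpacking that community. It assumes the 8-byte layout from the BGP flow specification (RFC 5575): a 2-byte type, a 2-byte AS number, and a 4-byte IEEE float giving the rate in bytes per second, all in network byte order. The function names and the private AS number 64512 are illustrative assumptions, not part of the research prototype.

```python
import struct
from typing import Tuple

TRAFFIC_RATE = 0x8006  # type value from the table

def encode_traffic_rate(asn: int, bytes_per_second: float) -> bytes:
    """Pack type, 2-byte AS number, and 4-byte float in network byte order."""
    return struct.pack("!HHf", TRAFFIC_RATE, asn, bytes_per_second)

def decode_traffic_rate(community: bytes) -> Tuple[int, float]:
    """Unpack an 8-byte traffic-rate community into (asn, bytes_per_second)."""
    ctype, asn, rate = struct.unpack("!HHf", community)
    if ctype != TRAFFIC_RATE:
        raise ValueError(f"not a traffic-rate community: {ctype:#06x}")
    return asn, rate

# Hypothetical example: AS 64512 (a private AS number) asks for a rate of 0.
# Under the RFC 5575 assumption, a rate of 0 means "discard matching traffic".
community = encode_traffic_rate(64512, 0.0)
print(community.hex())
print(decode_traffic_rate(community))
```

The other three rows would need their own packing rules (a bitmask, a route target, and a DSCP value), which this sketch does not attempt.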

A few selected points:

Trust issues for routes defined by victim networks.

Research prototype is set up. For questions, comments, setup, contact: http://www.cymru.com/jtk/

I like it as an example of collective action against the bad guys. How to deal with the trust issues seems the biggest item to me.

Hm, at least to the participating community, this is a reputation system.

On Tuesday 2 June 2009, the U.S. Federal Trade Commission (FTC) took legal steps that shut down the web hosting provider Triple Fiber network (3FN.net).

Looking at Autonomous Systems (ASNs) listed in the spam blocklist CBL, ...

Back in February, Verizon announced it would start requiring outbound mail to go through port 587 instead of port 25 during the next few months. It seemed like a good idea to squelch spam. Most other major ISPs did it. People applauded Verizon for doing it.

Unfortunately, it seems that if it had any effect, it was short-lived. Looking at anti-spam blocklists on a daily basis, a couple of Verizon Autonomous Systems (ASes), AS-19262 and AS-701, do show dips in listings on the PSBL blocklist in March. But they don’t last.
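One hedged way to make "dips that don’t last" concrete is to compare each day’s listing count for an AS against a baseline and record how long the count stays below a threshold. The sketch below is an illustration only: the function `find_dips`, the 7-day baseline, the 50% threshold, and the counts themselves are all made-up assumptions, not PSBL data or the project’s actual method.

```python
from statistics import mean

def find_dips(daily_counts, baseline_days=7, drop_fraction=0.5):
    """Return (dips, baseline), where dips is a list of (first_day, last_day)
    index pairs during which the count stayed below drop_fraction * baseline.
    The baseline is the mean of the first baseline_days counts."""
    baseline = mean(daily_counts[:baseline_days])
    threshold = baseline * drop_fraction
    dips, start = [], None
    for day, count in enumerate(daily_counts):
        if count < threshold and start is None:
            start = day                      # a dip begins
        elif count >= threshold and start is not None:
            dips.append((start, day - 1))    # the dip ended yesterday
            start = None
    if start is not None:                    # series ends while still in a dip
        dips.append((start, len(daily_counts) - 1))
    return dips, baseline

# Synthetic daily listing counts for one hypothetical AS.
counts = [100, 104, 98, 101, 99, 102, 96, 40, 35, 42, 90, 103, 99]
dips, baseline = find_dips(counts)
for first, last in dips:
    print(f"dip from day {first} to day {last} ({last - first + 1} days)")
```

A short dip followed by a return to the baseline, as in this synthetic series, is the pattern described above.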

Spammers are very adaptable, partly because the botnets they use are adaptable. Good try, Verizon.

This information is from an NSF-funded academic research project at the University of Texas at Austin business school. Thanks to PSBL for the blocklist data.

-jsq

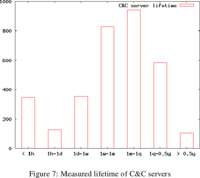

The subject is my interpretation of a sixteen-page paper by a joint Chinese-German project to examine botnets in China.

Botnets have become the first-choice attack platform for network-based attacks during the last few years. These networks pose a severe threat to normal operations of the public Internet and affect many Internet users. With the help of a distributed and fully-automated botnet measurement system, we were able to discover and track 3,290 botnets during a period of almost twelve months.

— Characterizing the IRC-based Botnet Phenomenon, Jianwei Zhuge (1), Thorsten Holz (2), Xinhui Han (1), Jinpeng Guo (1), and Wei Zou (1); (1) Peking University Institute of Computer Science and Technology, Beijing, China; (2) University of Mannheim Laboratory for Dependable Distributed Systems, Mannheim, Germany. Reihe Informatik, TR-2007-010

The paper provides many interesting statistics, such as that only a small percentage of botnets are detected by the usual Internet security companies. But the main point is exactly that a distributed and adaptive honeypot botnet detection network was able to detect and observe botnets in action and to get data for all those statistics. Trying to deal with an international adaptive botnet threat via static software or occasional centralized patches isn’t going to work.

Some readers conclude that this paper shows that reputation services don’t work, because they don’t show most botnets. I conclude that current reputation services don’t work because they aren’t using an adaptive distributed honeypot network to get their information, and because their published reputation information isn’t tied to economic incentives for the affected ISPs and software vendors, such as higher insurance rates.

-jsq

FBI indicts, and in some cases gets guilty pleas or sentences, eight people they say were involved in botnet-related activities:

Secure Computing’s principal research scientist Dmitri Alperovitch was quite happy about the news.

“We welcome this news and applaud the FBI’s efforts and law enforcement worldwide in attempting to cleanup the cesspool of malware and criminality that the botmasters have promoted,” Alperovitch said in a press release. “Since botnets are at the root of nearly all cybercrime activities that we see on the Internet today, the significant deterrence value that arrests and prosecutions such as these provide cannot be underestimated.”

— FBI Cracks Down (Again) on Zombie Computer Armies, By Ryan Singel, Threat Level, November 29, 2007 | 4:54:32 PM

Indeed, good news.

Now where are the metrics to show how much effect this actually had on number of botnets, number of bots, criminal activities mounted from bots, etc.? Baseline, ongoing changes, dashboard, drilldown?

-jsq

PS: Interestingly, every blog or press writeup I’ve seen about this misuses the word “hacker” to apply to these crackers, yet the actual FBI announcement never makes that mistake: it says cyber crime.

Adam evaluates a New York Times article about NYC school evaluations, and sums it up:

The school that flunked has more students meeting state standards than the school that got an A.

— Measuring the Wrong Stuff, by Adam Shostack, Emergent Chaos, 9 Nov 2007

Measurement is good, but, for example in information security, if your measurements aren’t relevant to the performance of the company (economic, cultural, legal compliance, etc.), measurement can waste resources or steer the ship of state or company onto ice floes.

-jsq

Why not bootstrap a Fortune 500 Secure Coding Initiative to drive better products, services and share best practices in the software security space?

— Secure Coding Advocacy Group, Gunnar Peterson, 1 Raindrop, 23 October 2007

Yes, if the customers demanded it, that might make some difference, and the vendors do pay the most attention to the biggest customers. Of course the biggest customer is the U.S. government, and they seem more interested in CYA than in actual security. And I’m a bit jaded on “best practices” due to reading Black Swans. But regardless of the specific form of “better” such a group demanded, demanding better security might make some difference.

Maybe they could also demand risk management, which would include having watchers watching ipsos custodes. Not just in the circular never-ending hamster wheel of death style, but for actual improvement.

-jsq

Schedulers can be objectively tested. There’s this thing called "performance", that can generally be quantified on a load basis.

Yes, you can have crazy ideas in both schedulers and security. Yes, you can simplify both for a particular load. Yes, you can make mistakes in both. But the *discussion* on security seems to never get down to real numbers.

So the difference between them is simple: one is "hard science". The other one is "people wanking around with their opinions".

— Re: [PATCH] Version 3 (2.6.23-rc8) Smack: Simplified Mandatory Access Control Kernel, by Linus Torvalds, kerneltrap.org, Monday, October 1, 2007 – 7:04 am

Linus Torvalds, inventor of Linux and thus originator of its associated industry, continues:
